
Imagine that one day you get a call from your child, and they sound distressed. They explain the emergency and ask for financial help to get out of the situation. Naturally, you would help them. But what if it’s an AI voice cloning scam? At a time when AI can do everything from composing symphonies to mimicking human emotions, scammers are using it to clone a loved one’s voice and siphon off people’s hard-earned money.

AI voice cloning scams are increasingly common, and staying cautious is more important than ever. This guide explains how the scam works and what you can do to stay safe.


Part 1. What Is AI Voice Cloning?

AI voice cloning is the process of using Artificial Intelligence (AI) to generate a synthetic copy of a human voice. It’s more than just voice recording, as it involves analyzing voice patterns and reproducing a voice that can say anything. Some AI tools can create a voice that closely mimics the original.

This technology relies on AI and machine learning models that take audio samples of the target voice, then process and analyze them to capture its nuances, pitch, tone, and rhythm. Finally, the model uses this understanding to generate a matching voice, which can be made to say things the original speaker never said.
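To make the idea of "analyzing pitch" a little more concrete, here is a minimal Python sketch that estimates the pitch of a short audio sample using autocorrelation. It is only an illustration of one voice feature, not how any real cloning tool is built; those rely on far more complex neural models. The function names (`synth_tone`, `estimate_pitch`) and the synthetic sine tone standing in for a voice sample are assumptions made for this example.

```python
import math

def synth_tone(freq_hz, sample_rate=8000, duration_s=0.1):
    """Generate a pure sine tone as a stand-in for a short voice sample."""
    n = int(sample_rate * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def estimate_pitch(samples, sample_rate=8000, min_hz=60, max_hz=400):
    """Estimate the fundamental frequency (pitch) via autocorrelation.

    The signal is compared with shifted copies of itself; the shift (lag)
    that matches best corresponds to one period of the pitch.
    """
    min_lag = sample_rate // max_hz  # shortest period we consider
    max_lag = sample_rate // min_hz  # longest period we consider
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

tone = synth_tone(200.0)
print(estimate_pitch(tone))  # 200.0
```

A real voice is much messier than a sine tone, which is why cloning tools model pitch, tone, and rhythm together rather than measuring a single number.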

Part 2. Common Types of AI Voice Cloning Scams

As AI becomes more sophisticated, scams are becoming more prevalent every day. Scammers can obtain a few snippets of your voice from social media and clone it to scam your loved ones. Some common types of AI voice cloning scams are as follows:

1. Accident or Blackmailing Scam

Scammers can use AI voice cloning to impersonate your child or another loved one and fake an emergency, such as an accident or legal trouble. The familiar voice makes it hard for parents to question the legitimacy of the call, and they often end up sending money via digital currency or wire transfer, both of which are difficult to recover.

One such incident happened to Sharon Brightwell of Dover, Florida. On 9 July 2025, she received a phone call from an unknown number, and on the other end was her “daughter,” crying and in distress. The voice said that she had hit a pregnant woman with her car and needed money immediately to avoid criminal charges. According to WFLA, Sharon was terrified, rushed to help, and sent $15,000 in cash via courier. There are plenty of similar incidents around the world.

2. Virtual Kidnapping Scams

Another such imposter scam involves parents receiving a call from an unknown number. On the other end is what sounds like their child, crying, screaming, and in deep distress. Another person then comes on the line and pressures the parents into sending a large ransom. To heighten the parents’ fear and despair, the scammers might employ SIM jacking, where attackers take over the child’s phone number and redirect its calls to themselves.

In April 2023, an Arizona woman named Jennifer DeStefano reported that an anonymous caller claimed to have kidnapped her 15-year-old daughter and demanded a $1 million ransom. According to CNN, the impersonators threatened to drug and assault her child if she failed to pay. The matter was immediately reported to the police, who identified it as a common scam.

A similar scam happened last year, when a woman named Robin received a call from an impersonator who claimed to have kidnapped her mother-in-law. According to The New Yorker, the fraudster threatened to kill her mother-in-law if he didn’t receive five hundred dollars to travel. After an investigation, the authorities identified it as a voice cloning scam, and Robin eventually got her money back from Venmo.

3. Grandparent Scam

The grandparent scam is an AI voice cloning scam where criminals pose as a grandchild or another family member to persuade elderly victims to send money. Here’s how a typical grandparent scam starts: the scammers impersonate the grandchild and sound distressed, claiming to be in an emergency, whether an accident, trouble with the law, or a kidnapping. The calls are often made around midnight, when the grandparents aren’t fully awake and find it difficult to judge whether the call is authentic.

Such scams are on the rise. A report released by the United States Attorney’s Office revealed that around 13 individuals were arrested in connection with a grandparent scam. Over the years, they tricked hundreds of elderly people into believing that their grandchild or family member was in trouble. In total, there were over 400 victims above the age of 84 years with more than $5 million in losses.

Part 3. How Do Scammers Get Your Voice?

Now that you know what scammers can do with your voice, understanding how they get it is crucial to protecting yourself and your family. One way, mentioned in the previous section, is to comb through social media accounts, including your kids’, for voice recordings.

Another way is by calling you and recording your voice when you speak. Even saying as little as “Hello, who is this?” can be enough to capture your voice and clone it. It’s therefore crucial to avoid picking up calls from unknown numbers.

Part 4. Preventive Steps for Kids and Parents

While AI voice scams may sound intimidating, the good news is that there are preventive steps to keep yourself and your kids safe. Here are some tips that you must follow:

Don’t Pick Up Calls from Unknown Numbers

The simplest way to stay safe from AI voice cloning scams is to not answer calls from unknown numbers. Let the calls go to voicemail, listen to the messages, and then decide whether they need a response. This way, you aren’t handing scammers your or your kids’ voice to clone, and you aren’t falling prey to scam calls.

Verify Information

If you pick up a call, verify the information the caller provides before paying anything. If it sounds like your child on the other end, hang up and call them back on their own number, or contact one of their friends to confirm the story.

If it’s a political call, like the AI calls mimicking Joe Biden that urged New Hampshire voters not to cast ballots in the state’s January 2024 primary, do your homework first. Check with a reputable source to confirm whether the call is genuine. If the caller asks for a donation to a cause, make sure the organization is reputable and has a verifiable presence. Ask your child to always tell you about such calls and discuss them with you before making any payments or sharing any personal information.
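The verification advice above can be written down as a simple checklist. The Python sketch below is only an illustration of that reasoning: the `Call` fields, the `red_flags` and `should_pay` helpers, and the list of payment methods are hypothetical names chosen for this example, based on the warning signs described in this guide.

```python
from dataclasses import dataclass

# Payment methods this guide describes as difficult to recover (illustrative list).
HARD_TO_RECOVER = {"wire transfer", "digital currency", "cash courier"}

@dataclass
class Call:
    from_known_number: bool
    asks_for_money: bool
    urgent: bool
    payment_method: str = ""
    verified_by_callback: bool = False  # did you call the person back yourself?

def red_flags(call):
    """Return the warning signs present in a call."""
    flags = []
    if not call.from_known_number:
        flags.append("unknown number")
    if call.asks_for_money and call.urgent:
        flags.append("urgent request for money")
    if call.payment_method.lower() in HARD_TO_RECOVER:
        flags.append("hard-to-recover payment method")
    if not call.verified_by_callback:
        flags.append("story not verified by calling back")
    return flags

def should_pay(call):
    """Never pay before the story has been independently verified."""
    return call.verified_by_callback

call = Call(from_known_number=False, asks_for_money=True, urgent=True,
            payment_method="Wire Transfer")
print(red_flags(call))
print(should_pay(call))  # False
```

The key design choice is that no number of reassuring details can substitute for calling the person back yourself; `should_pay` depends on that one check alone.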

Don’t Overshare on Social Media

Scammers can comb through your social media accounts to find voice notes and videos and make a clone. Even a short video of you and your child can be enough to create a copy of the voice. So, keep your posts and personal information private and visible only to friends and family.

Use Parental Control Software

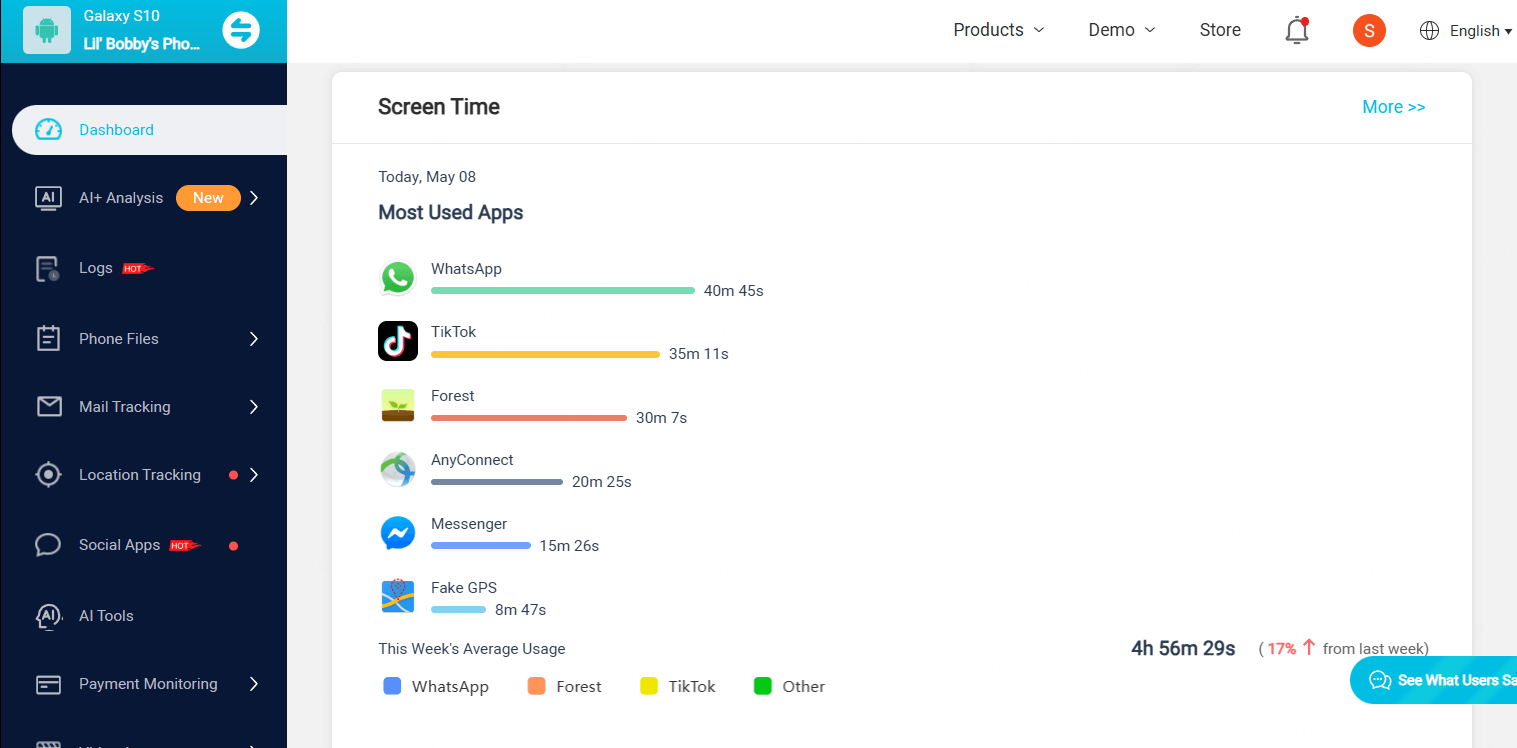

Kids might not be vigilant enough to look for red flags and spot an AI voice scam, so you must keep an eye on them. Parental control software like KidsGuard Pro can be handy in this respect. This comprehensive parental control app has a simple interface, is easy to install, and is loaded with features.

For one, you can monitor your kids’ smartphones in real time so you know who they are interacting with by phone, SMS, and social media. It lets you take screenshots and obtain call recordings to share with legal authorities in case of a scam. It also provides detailed insight into your kids’ social media activities, including their conversations and posts. You can set time limits on or block any apps, if needed.

Report Scams

If you encounter an AI voice cloning scam, report it immediately to the relevant authorities. You can visit the Federal Trade Commission (FTC) website to file a report and find out the next steps.

FAQs about AI Voice Cloning Scams

Can AI voice clones fool bank voice authentication?

Yes, voice cloning can be accurate enough to fool some bank voice authentication systems, and criminals can use it to take money from your account. This has pushed many banks and financial services to rethink the use of voice authentication.

How much audio does a scammer need to clone my voice?

A scammer might need as little as three seconds of audio to clone your voice. Even a short call or a few seconds of social media video can be sufficient. It’s therefore recommended not to pick up calls from unknown numbers.

What immediate steps should I take if someone clones my voice?

If you find out that someone has cloned your voice, immediately report the activity to the Federal Trade Commission (FTC) and your state attorney general. Warn your family and friends to be skeptical of calls that sound like you, especially those involving urgent requests for money or personal information.

Are there tools to detect cloned audio?

Yes, there are tools to detect cloned audio. You can use tools like the McAfee Deepfake Detector to identify AI-generated deepfakes, including audio clones. You can also use comprehensive parental controls to retrieve your child’s call recordings and check them for cloned audio. More tools may be developed in the future, so stay informed about advances in security technology and use them to bolster your defenses.

Conclusion

AI voice cloning scams are a serious threat nowadays and can cause substantial financial losses, compromise personal and sensitive information, and inflict emotional and psychological trauma on victims. It’s therefore crucial to stay vigilant and protect yourself and your kids from falling for these deceptive tactics. Children can be a major target of scammers, as they are not yet mature enough to detect the scams. So, use comprehensive parental control tools like KidsGuard Pro to keep an eye on your kids’ online activities, including who they’re communicating with and their social media activities.